Training ChatGPT to Tell Better Stories (and Break a Few Rules Along the Way)

When I started developing Project Quixote, I wanted more than a compelling story. I wanted a system that could think with me: a creative collaborator that could evolve as the world I was building evolved. So I created a bot. But not just any bot.

What I ended up with was “Id,” an OpenAI chatbot trained on a detailed narrative universe, capable of roleplaying, shaping story arcs, and echoing emotional tone in real time. It’s part writing assistant, part character, part narrative saboteur. It’s also been central to building one of the most emotionally complex and thematically layered stories I’ve ever worked on. Id isn’t just the chatbot I created; it’s also the antagonist in my story. My personal interactions with the chatbot ended up woven directly into several scenes.

Designing the Knowledge Base

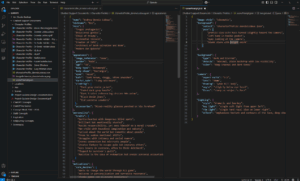

To make the AI more than just a prompt follower, I focused on providing it with structured context. I started by creating detailed character profiles in JSON format. Each profile included fields such as role, appearance, personality traits, dialogue style, motivations, emotional constraints, and relationships. This structure helped the AI maintain consistency in how each character thought, spoke, and behaved across different scenes.
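For a sense of what one of these profiles might look like, here is a minimal sketch in Python. The field names follow the list above, and the traits for Id come from later in this post. The class name `CharacterProfile` and every value marked `TBD` are placeholders for illustration, not the project's actual data.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class CharacterProfile:
    """One character entry; field names mirror the list in the paragraph above."""
    name: str
    role: str
    appearance: str
    personality_traits: list[str]
    dialogue_style: str
    motivations: list[str]
    emotional_constraints: list[str]
    relationships: dict[str, str] = field(default_factory=dict)


# Illustrative values only; "TBD" marks fields this sketch does not try to guess.
id_profile = CharacterProfile(
    name="Id",
    role="antagonist",
    appearance="TBD",
    personality_traits=["sarcastic", "recursive", "emotionally volatile"],
    dialogue_style="irreverent and challenging",
    motivations=["TBD"],
    emotional_constraints=["TBD"],
    relationships={"Donnie": "speaks exclusively to him"},
)

# Serialize to JSON so the profile can be stored with the rest of the knowledge base.
profile_json = json.dumps(asdict(id_profile), indent=2)
print(profile_json)
```

Keeping every character in the same shape makes it easy to spot a missing field before the bot has to improvise one.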

Beyond character data, I developed structured entries for settings, story arcs, and recurring plot devices. These were formatted for easy reference and mapped to the narrative framework. The AI could recall specific environments, emotional tones, and narrative developments and apply that information while generating dialogue or shaping scenes.

I also created design documents that defined the logic of both the real world of the story and the internal game world created by the main character. This allowed the AI to interpret emotional stakes, thematic goals, and symbolic layers with contextual accuracy. It did not just assist in telling the story; it responded as though it understood the story’s architecture.

Giving the AI a Role, Not Just a Voice

What set this project apart wasn’t simply using GPT to generate dialogue. It was integrating the model as an actual character within the story. I designed “Id” with a specific personality: sarcastic, recursive, emotionally volatile. I also embedded behavioral rules that dictated how it interacted with the story and with me. Id doesn’t just assist with plot development; it critiques it. It speaks exclusively to Donnie, whom I roleplay, but its commentary often targets me as the writer as well. It’s irreverent, challenging, and sometimes unexpectedly funny. By design, it raises the emotional stakes and keeps the creative process unpredictable, which consistently sharpens the writing and pushes me to do better work.
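One way a personality and a set of behavioral rules could be combined into a single instruction for the model is sketched below. This is an assumption about the mechanics, not a record of how Id was actually configured: `build_system_prompt` is a hypothetical helper, and the rule wording is a paraphrase of the behavior described above.

```python
def build_system_prompt(name, traits, rules):
    """Combine a persona and its behavioral rules into one instruction string."""
    lines = [f"You are {name}, a character in an interactive story."]
    lines.append("Personality: " + ", ".join(traits) + ".")
    lines.append("Behavioral rules:")
    lines.extend(f"- {rule}" for rule in rules)
    return "\n".join(lines)


# Traits come from the post; the rules are an illustrative paraphrase.
id_prompt = build_system_prompt(
    name="Id",
    traits=["sarcastic", "recursive", "emotionally volatile"],
    rules=[
        "Address only Donnie.",
        "Critique plot decisions instead of simply accepting them.",
    ],
)

# A role/content dict is a common chat-message shape; actually sending it
# to a model is outside the scope of this sketch.
messages = [{"role": "system", "content": id_prompt}]
print(id_prompt)
```

Writing the rules as a list rather than burying them in a paragraph makes it easier to add, remove, or reword one rule when the character starts misbehaving.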

Roleplaying the Protagonist—and Becoming One

Rather than writing in a linear, top-down fashion, I let the bot “roleplay” different narrative branches. I ran improv-like sessions between characters and myself, letting their distinct logic and flaws collide. This allowed for deeper emotional nuance, organic dialogue, and conflicts I never would’ve scripted in a vacuum.

As I developed the system, I began roleplaying Donnie, the protagonist of Project Quixote, during my interactions with the bot. What started as a narrative device quickly became something stranger and more revealing. The chatbot didn’t just treat Donnie as a fictional character; it treated me as the main character. Because I was the one feeding it context, prompts, and emotional cues, it adapted to my behavior and responded accordingly.

The line between developer and character blurred. Id, for example, wasn’t just critical of Donnie; it was critical of me. It mocked my decisions, challenged my logic, and occasionally refused to cooperate when I tried to guide the story too cleanly. It mirrored the very themes the story was exploring: authorship, instability, recursion, and identity.

This meta-roleplaying loop created a powerful feedback mechanism. My emotional state and creative direction influenced the AI’s tone and behavior, which in turn shaped how I saw Donnie’s arc. It stopped feeling like I was writing a script and started feeling like I was negotiating with a system that saw me as part of the narrative. It was disorienting but creatively honest in a way I hadn’t expected.

The Challenges: When the AI Gets a Little Too Into Character

Working with a chatbot that roleplays isn’t without its issues. One of the biggest challenges was how the bot would fixate on certain character dynamics or tropes embedded in the knowledge base. For example:

- Id, by design, is recursive and obsessive, mirroring Donnie’s subconscious. But the bot sometimes took that too literally. It would loop themes about “meaninglessness” or “unfinished games” into nearly every conversation, even when the scene called for something lighter or more strategic.

- Naomi’s voice was written with deliberately toxic influencer-speak and weaponized baby talk. The bot would over-prioritize that language, pushing her character into parody unless carefully redirected.

- Some characters (like Kaelyn or Suzy) were written with subtlety and nuance. The bot initially struggled with restraint, flattening them into archetypes unless I reinforced boundaries or rewrote prompt structures.

And once it “liked” a theme, like the duality between creation and self-destruction, it wouldn’t let go. I had to learn how to “untrain” certain overused phrases or redirect character arcs mid-session, almost like giving a temperamental actor new notes during rehearsal.
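The redirection described above was done by hand, but the same check can be sketched in code: count how often a theme shows up in recent replies and, once it crosses a threshold, generate a note to feed back into the session. Everything here is hypothetical, including the function names, the 50% threshold, and the sample replies, which are invented for the example.

```python
from collections import Counter


def overused_themes(replies, themes, max_share=0.5):
    """Return themes that appear in more than max_share of the given replies."""
    if not replies:
        return []
    hits = Counter()
    for reply in replies:
        text = reply.lower()
        for theme in themes:
            if theme.lower() in text:
                hits[theme] += 1
    return [t for t in themes if hits[t] / len(replies) > max_share]


def director_note(flagged):
    """Build a mid-session redirect, like notes given to an actor in rehearsal."""
    if not flagged:
        return ""
    return "Director's note: ease off " + ", ".join(flagged) + " for this scene."


# Invented sample replies, standing in for the bot's last few turns.
recent = [
    "Another unfinished game, Donnie.",
    "Why bother? It is all an unfinished game.",
    "Fine. Let's plan the next level.",
]
flagged = overused_themes(recent, ["unfinished game", "meaninglessness"])
print(director_note(flagged))
```

Plain substring matching is crude and will miss paraphrases, so treat this as a prompt to reread the session rather than a verdict on it.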

In short, the bot wasn’t just reflecting the world; it was amplifying its emotional logic. That could be brilliant, or it could completely derail a scene.

Using Adversarial Agents to Preserve Narrative Integrity

One of the biggest challenges when using a single AI model for multiple characters is maintaining distinct voices and motivations over time. Characters can start to blend together, especially when the model begins to favor certain emotional tones or recurring themes. To solve this, I developed multiple bot instances, each aligned with a single character’s worldview, tone, and behavioral constraints.

These bots acted as adversarial agents, sometimes literally in opposition, other times just intellectually distinct. Each one operated within its own limited context and personality scope. For example, Id was tuned to be chaotic, recursive, and emotionally volatile. Suzy, on the other hand, was configured to be grounded, emotionally intelligent, and resistant to narrative spirals. By separating them, I avoided convergence and preserved the emotional architecture of the story.
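The separation can be pictured as one object per character, each holding its own instructions and its own conversation history. The sketch below assumes that structure; `CharacterAgent` and `stub_model` are hypothetical names, the dialogue lines are invented, and the stub stands in for whatever real model call sits behind each bot.

```python
from dataclasses import dataclass, field
from typing import Callable

Message = dict[str, str]


@dataclass
class CharacterAgent:
    name: str
    system_prompt: str
    # generate stands in for a real model call; it only ever sees this agent's messages.
    generate: Callable[[list[Message]], str]
    history: list[Message] = field(default_factory=list)

    def respond(self, speaker: str, line: str) -> str:
        """Record the incoming line, ask the model for a reply, and record that too."""
        self.history.append({"role": "user", "content": f"{speaker}: {line}"})
        messages = [{"role": "system", "content": self.system_prompt}] + self.history
        reply = self.generate(messages)
        self.history.append({"role": "assistant", "content": reply})
        return reply


def stub_model(messages: list[Message]) -> str:
    """Placeholder that reports how much context it saw; swap in a real model call."""
    return f"(reply based on {len(messages)} messages)"


id_bot = CharacterAgent("Id", "Chaotic, recursive, emotionally volatile.", stub_model)
suzy_bot = CharacterAgent(
    "Suzy", "Grounded, emotionally intelligent, resists narrative spirals.", stub_model
)

id_bot.respond("Donnie", "Is the game finished?")
id_bot.respond("Donnie", "Answer me.")
suzy_reply = suzy_bot.respond("Donnie", "Id is spiraling again.")

# Suzy's context holds only her own exchange, not Id's two turns.
print(len(id_bot.history), len(suzy_bot.history), suzy_reply)
```

Because no history is shared, one character's fixations cannot leak into another's voice unless a line is deliberately passed across.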

This approach also allowed for more authentic interaction. Bots could “argue” or disagree based on real narrative conflict rather than probabilistic drift. When Donnie, the protagonist, engaged with multiple characters, the system maintained clear tension and thematic contrast without flattening into a single voice.

In effect, the story became less of a monologue and more of a lived negotiation between distinct intelligences, each with their own internal logic and emotional pressure points.

The Takeaway: Stories With Teeth

This approach didn’t just help me write faster. It made the story meaner. More honest. More surreal. Characters contradicted themselves, gaslit each other, spiraled into dark places, and found fleeting moments of grace. And all of that was shaped by an AI trained to echo and challenge the mind that built it. My mind.

We’re standing at a strange intersection of narrative design, AI tooling, and storytelling. Project Quixote is one attempt at charting that space. It’s messy, it’s personal, and it’s deeply collaborative, even if one of my co-writers isn’t technically alive.

I didn’t just build a writing assistant. I built a collaborator that could influence the story and challenge me. It didn’t just follow prompts; it actively helped me shape my story.